One of the goals of data architecture is to make data easy to access and use. Another important goal is to encourage the reuse of data and processes. Both goals are supported by data connectors for the data lake. Unfortunately, data lake connectors are not commercially available today, so you’ll need to build your own, but the benefits of doing so are obvious. Among the most important are fostering reuse, streamlining work, and improving quality and consistency.

Standard Data Connectors

Data connectors are stand-alone software components to export data from a data source, sometimes converting the data from one format to another. Data connectors are often implemented as APIs and sometimes as services.

Data connectors are widely available. You can find connectors for SaaS applications such as Salesforce, QuickBooks, Workday, WooCommerce, HubSpot, and many more. Connectors are available for social media data sources including Facebook, Twitter, LinkedIn, YouTube, etc. Database connectors for RedShift, Big Query, and many relational databases are available. It seems that connectors are available for nearly every data source. But they are used almost exclusively for data lake ingestion, data acquisition for warehousing, and data sourcing for data mashups. Connectors are used frequently to put data into data lakes and data warehouses. But what about getting data out? What about the data analysts and data scientists who need to get data from data lakes and data warehouses? Shouldn’t they have connectors too? All of the connectors described above are commercially available software components. The primary use case is moving data from one database to another. The connectors can be generalized and standardized because their purpose is to access a defined set of data and deliver it in its native form. Data knowledge is embedded in the connectors. For example, a QuickBooks connector knows the format, structure, and access protocols for the QuickBooks database.

The Need for Data Lake Connectors

Data connectors for the data lake have different requirements. They need to access defined, but frequently changing, sets of data and deliver it in the form that is needed by the data consumer. The wide variety of data in a data lake makes it impractical to embed knowledge of data directly into connectors. It is more practical to externalize that knowledge as metadata. Delivering data in the form that is needed by the consumer requires that the data can be reorganized before it is delivered.

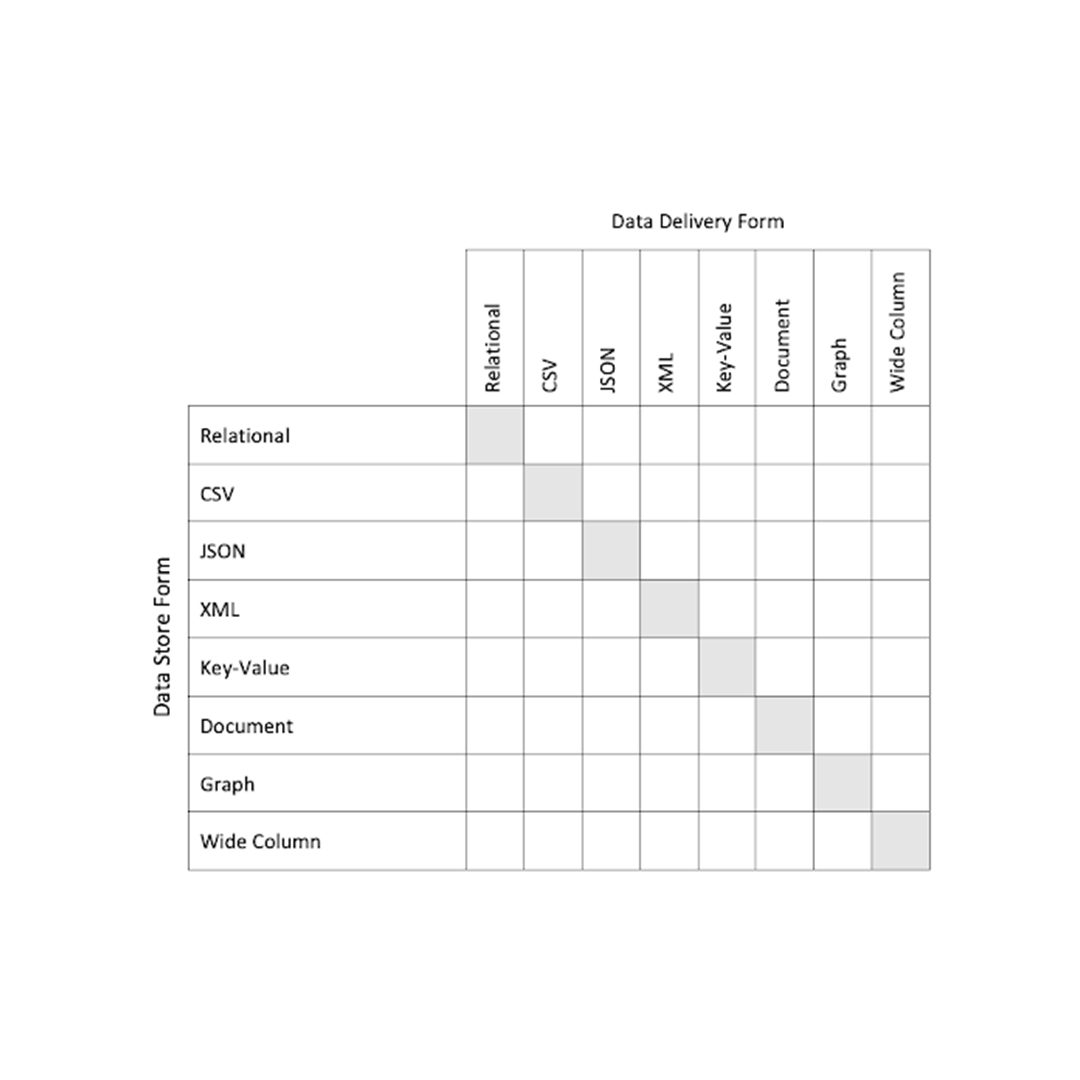

Imagine, for example, a data scientist who wants to access data stored as a JSON file but receive data formatted as rows and columns. Inversely, they may want to access data from relational tables and have it delivered in JSON format. These are but two examples of many possibilities as shown in the table below.

Table 1. Data Storage and Delivery Forms

The table shows 64 combinations of data store and data delivery forms, with 56 of them changing the form of the data before delivery. That’s a lot of data transformation that can be packaged in reusable connectors. The ability to change the form of data at the time of access fits neatly with the concept of schema-on-read. A Data Lake Connector Example To make the data lake connector concept more tangible, let’s take a quick look at how one connector might be built. Every data lake connector is unique, so this is simply one example to illustrate the concept. Recall the example of a data scientist who wants to access data stored as a JSON file but receive data formatted as rows and columns. The data connector to meet this need must know to what columns the fields in the JSON file are mapped. To do that it must be aware of all of the JSON tags for every record in the file, the maximum size of data associated with each tag, and the implied data type for each tag. This knowledge is ideally captured as metadata at the time of data ingestion. You may want to capture additional metadata such as tagging of personally identifiable information or other sensitive data types. At the time of data access, the connector parses each record to determine what fields are contained in the record and maps each into the column structure defined by the metadata. It may include data transformations such as obfuscation of sensitive data. The API or service communications protocol may also allow additional features such as filtering or sampling the set of data. The Benefits of Reusable Connectors From the early years of software development, we’ve known the benefits of reusable components. They accelerate development, and they improve the quality, consistency, and maintainability of software systems. Reusable data lake connectors bring these same benefits to the data engineers, data analysts, and data scientists who access the data lake to develop data pipelines and data analytics.

Building in Data Protection

Every data access process is an opportunity to automate some data governance controls. When personally identifiable information (PII), privacy-sensitive data, or security-sensitive data in the data lake are tagged with metadata, data lake connectors should include data protection functions. Processes using data lake connectors to access datasets that contain sensitive data can be required to show a token to authorize access. Without authorization, the connector should automatically obfuscate sensitive data or remove it from the delivered dataset.

Tracking Data Access

Data lake connectors can be designed with a metadata collection function to track data access. Data management is a complex job. Knowledge about data access and usage helps to manage the data effectively. Connectors can capture metadata to answer questions such as: Which data is being used? When and how frequently is it used? In which forms is it stored and in which forms is it accessed?

Final Thoughts

Data lake connectors are a concept that makes sense. They are not commercially available today. (Yes, Microsoft has the Azure Data Lake connector but it’s not quite the same thing.) You’ll need to build your own, but the benefits of doing so should be obvious. Much of this processing is being built in your organization already—but it’s being built repeatedly and inconsistently. Building data connectors that are reused speeds development and improves quality and consistency—all with the added benefits of governance and tracking capabilities. This is a relatively small part of the large and complex field of data management architecture, but one with the potential to make a substantial difference for your data analysts, data scientists, and data engineers. Data architects, I encourage you to step up and take the lead.